Off The Charts: D3 and the Future of Data Visualization

Posted by dave on December 7, 2011 Off The Charts

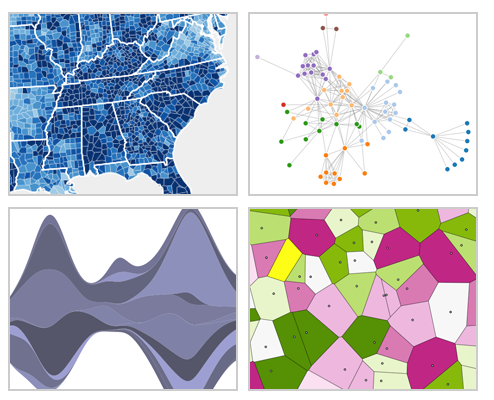

Recently, we had the opportunity to chat with data visualization expert Mike Bostock. In addition to authoring the JavaScript libraries Protovis and D3, Mike has recently assumed the role of visualization scientist at Square. There, he helped develop Square’s new open source visualization system, Cube. We talked with Mike about his work in the field, the stories behind D3 and Cube, and his thoughts on the state of data visualization today.

We’ve read a bit about the history behind D3, the JavaScript library you authored. But we were hoping you could go into more detail. What motivated you to move on from your previous project, Protovis, and create D3?

[Editor’s Note: You can watch Mike’s presentation on this issue at W3Conf]

I’d say the motivation was to stop reinventing the wheel. The goal of D3 is to embrace and build on standard, universal representations of graphics, rather than reinvent them each time. During my work with Protovis, I was asked for gradients, pattern fills, dashed strokes, and any number of other features. With Protovis, we reached about 80% of the expressiveness you need. The remaining 20% was a long tail of graphical features that would have been tedious to support.

In contrast, with D3, the idea is to leverage the native representation: what your browser understands through the Document Object Model (DOM). This way, the toolkit isn’t a middleman that prevents you from using browser features. D3, in other words, builds on the underlying technologies without an intermediate, proprietary layer.

Where does development of D3 stand now? What are you working on?

Right now, I’m writing a book on D3. I believe a lot can be done to teach people the core concepts. The nice thing about building on top of these standards rather than reinventing them each time is that you can create this mature product without tons of work. The core functionality of D3 is (relatively) complete. But then there’s all this other stuff I want to build on top of that core. For example, the axis component and brush component are high level abstractions that can be added because the kernel has already been built.

What do you think of ggplot2 as a charting abstraction?

I think it’s a great approach to concisely express whatever view you want to construct. It doesn’t work as well in terms of interaction. You end up constructing static views. I love the fact that it adds legends, axes, labels and other elements automatically. The challenge would be to take that visual grammar and create something interactive that allows you to easily switch between different views and filters.

There’s been some discussion on the mailing list of building chart abstractions on top of D3. I’ve been going back and forth on some good ways a chart should be designed. But I’m more interested in the lower-level stuff. One of the central tenets of D3 is that it’s not a charting library. You can, however, use D3 to create your own chart types.

What other frameworks do you like?

I tend to use low-level graphics frameworks, or just the standards directly, rather than visualization frameworks. I’m a fan of Processing and three.js. Processing is quite popular for visualization, and I’m very impressed by those music videos made with three.js.

Can you tell us a bit about Cube, the open-source system that you helped develop for Square?

Cube is an application specific to time series visualization. Unlike D3, it comes with a whole lot right out of the box, so you can construct visualizations without writing code. You can also write your own Mongo queries if you want to do different types of analysis. We’re using it for custom visualization at Square, and it provides a central repository for various data we want to analyze. The great thing is that you can take different types of metrics and see them in one place, for example counting support tickets relative to the number of payments processed.
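To make the "support tickets relative to payments" idea concrete, here is a minimal TypeScript sketch of combining two event streams into one ratio metric per time bucket. It is not Cube's code or query language; the event shape, the type names `"payment"` and `"ticket"`, and the one-day bucket width are all assumptions chosen for illustration.

```typescript
// Hypothetical event shape: a type name plus a timestamp in milliseconds.
interface TimedEvent {
  type: string;
  time: number; // milliseconds since the Unix epoch
}

const DAY_MS = 24 * 60 * 60 * 1000;

// Count events of one type in fixed-width time buckets, keyed by bucket start.
function countByBucket(events: TimedEvent[], type: string, stepMs: number): Map<number, number> {
  const counts = new Map<number, number>();
  for (const e of events) {
    if (e.type !== type) continue;
    const bucket = Math.floor(e.time / stepMs) * stepMs;
    counts.set(bucket, (counts.get(bucket) ?? 0) + 1);
  }
  return counts;
}

// Divide one metric by another bucket by bucket; buckets with no denominator are skipped.
function ratioByBucket(numerator: Map<number, number>, denominator: Map<number, number>): Map<number, number> {
  const ratios = new Map<number, number>();
  denominator.forEach((denom, bucket) => {
    if (denom === 0) return;
    ratios.set(bucket, (numerator.get(bucket) ?? 0) / denom);
  });
  return ratios;
}

// Usage with made-up events: 4 payments and 1 ticket on day 0, 1 payment and 2 tickets on day 1.
const sampleEvents: TimedEvent[] = [
  { type: "payment", time: 0 },
  { type: "payment", time: 1000 },
  { type: "payment", time: 2000 },
  { type: "payment", time: 3000 },
  { type: "ticket", time: 500 },
  { type: "payment", time: DAY_MS + 10 },
  { type: "ticket", time: DAY_MS + 20 },
  { type: "ticket", time: DAY_MS + 30 },
];
const ticketsPerPayment = ratioByBucket(
  countByBucket(sampleEvents, "ticket", DAY_MS),
  countByBucket(sampleEvents, "payment", DAY_MS),
);
```

Bucketing both streams with the same step is what lets two unrelated kinds of events share one time axis; a system like Cube would do this server-side against its store rather than over an in-memory array.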

Do you have to translate your data to a Mongo store?

You can write these things called “emitters” that send out time-stamped events. By sending data to Cube, you’re in fact importing it into MongoDB.
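As a rough illustration of what an emitter does, the TypeScript sketch below stamps each event with the current time and buffers it until a flush hands the batch to a transport. The `type`/`time`/`data` field names are an assumption loosely modeled on time-stamped event records; the class name `BufferedEmitter` is hypothetical, and the actual transport to a Cube collector is deliberately left as a caller-supplied `send` function rather than a real endpoint.

```typescript
// Hypothetical event record; field names are assumptions for illustration.
interface StampedEvent {
  type: string;                  // event category, e.g. "payment"
  time: string;                  // ISO 8601 timestamp
  data: Record<string, unknown>; // arbitrary payload
}

// A minimal emitter: stamps each event with the current time and buffers it
// until flush() passes the whole batch to a caller-supplied send function.
class BufferedEmitter {
  private buffer: StampedEvent[] = [];

  constructor(
    private readonly send: (batch: StampedEvent[]) => void,
    private readonly now: () => Date = () => new Date(),
  ) {}

  emit(type: string, data: Record<string, unknown>): void {
    this.buffer.push({ type, time: this.now().toISOString(), data });
  }

  flush(): void {
    if (this.buffer.length === 0) return; // nothing to send
    const batch = this.buffer;
    this.buffer = [];
    this.send(batch);
  }
}

// Usage: collect batches in memory with a fixed clock instead of sending them anywhere.
const sentBatches: StampedEvent[][] = [];
const emitter = new BufferedEmitter((batch) => sentBatches.push(batch), () => new Date(0));
emitter.emit("payment", { amount: 12.5 });
emitter.flush();
emitter.flush(); // empty buffer, so no second batch is sent
```

Injecting the clock and the transport keeps the emitter testable and says nothing about how any particular backend stores the events.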

Before we let you go, we’d love to get your thoughts on the state of data visualization today. In particular, have you noticed any significant changes in the field in the past few years?

One of the main elements of change has been the web, which has allowed much more by way of interactive and dynamic visualizations. Now, everyone can be connected to data in real time, as browsers support extremely fast rendering and interactive displays. When the field of data visualization got started, access to interactive displays was limited to research labs. That’s all changing now.

You mentioned interactive visualization as being an emerging trend. Do you have other thoughts on where the field is heading?

There are two underlying trends that describe where things are headed. The first part is the browser as an interactive platform. This means you can now show interactive visualizations and allow people to change views and do filtering in real time, rather than having to install an application on their desktop. The second part is that your browser is connected to the web, which opens up access to big, live data sets. Any visualization (if it makes sense, of course) can be a live one.

Finally, with all the visualization libraries available at the moment, do you see any gaps in the landscape that still need to be filled?

I think there’s a need for more data processing and statistics tools in JavaScript. But one of the nice things about the JavaScript stack is that the code can live either on the client or the server. If you are going to send all the data to the client, you might want client-side tools to do aggregation and analysis. On the other hand, if you want to reduce the data before you send it out, you will be doing work on the server side (for example, there are lots of tools built on Hadoop and MongoDB’s MapReduce functionality).

For doing interactive modeling or exploration of data, it’d be nice to have more of those tools in JavaScript implementations, so that you can do the work in the client.
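As a small example of the kind of statistics helper that can run entirely in the browser, here is a TypeScript sketch that summarizes an array of numbers. The function name `summarize` and the chosen statistics are illustrative assumptions, not part of D3 or Cube.

```typescript
// Summary statistics for a numeric sample, computed entirely on the client.
interface Summary {
  count: number;
  min: number;
  max: number;
  mean: number;
  median: number;
}

function summarize(values: number[]): Summary {
  if (values.length === 0) throw new Error("summarize: empty input");
  // Sort a copy numerically so the caller's array is left untouched.
  const sorted = [...values].sort((a, b) => a - b);
  const n = sorted.length;
  const sum = sorted.reduce((acc, v) => acc + v, 0);
  const mid = Math.floor(n / 2);
  // Odd length: middle element; even length: mean of the two middle elements.
  const median = n % 2 === 1 ? sorted[mid] : (sorted[mid - 1] + sorted[mid]) / 2;
  return { count: n, min: sorted[0], max: sorted[n - 1], mean: sum / n, median };
}

// Usage: summarize a small sample before deciding how to draw it.
const stats = summarize([4, 1, 3, 2]);
```

Sorting once gives min, max, and median together; for very large samples, a server-side reduction of the kind described above would avoid shipping every value to the client.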
