Roundtable: Amazon Redshift Best Practices for BI, Part 2

Posted by on August 6, 2015 Technology,

This is a continuation of last week’s post on Amazon Redshift best practices.

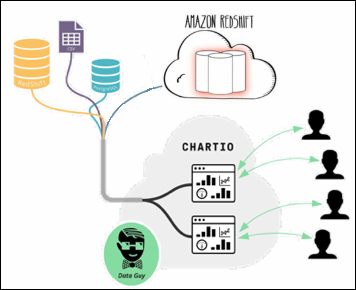

Earlier this month, Chartio hosted a roundtable on best practices for Amazon Redshift. Since many of our customers users store their data on Redshift, we wanted to bring together a panel of users with expertise in using Redshift for business intelligence applications. We were able to assemble a great team:

- Tina Adams, Product Manager at Amazon Redshift

- AJ Welch, Data Engineer at Chartio

- Nathan Leiby, Software Engineer at Clever

- Marc Bollinger, Engineering Manager of the Analytics Team at Lumosity

We held the roundtable at a meetup at WeWork in SOMA in San Francisco. It was moderated by Chartio’s VP of Growth, Jordan Hwang. You can also watch the video of the roundtable if you prefer.

Tina Adams, AJ Welch, Nathan Leiby, and Marc Bollinger

What’s your approach to schema design?

Tina: I usually tell customers to start with whatever schema they’re already using. Many of our customers do different things, very performantly, so we see star schemas.

A star scheme is great in some ways, but the downside is that you have to do a lot of joins and Redshift can do those very well if you tune it properly. We have something called a distribution key. For example, I can distribute my data on customer ID and when I do a join on customer ID, all the data is co-located on the same node. So you don’t have to pull data from another node to do your join.

But, that might not always work for you, because you join on different things, so Redshift’s default is to try to balance out the cluster and distribute evenly. So if you don’t specify a distribution key, we’ll do an even distribution across all the nodes, which can work very well too.

So it depends on what you’re doing. But if you have a star schema, we’ve written a detailed blog post about running star schemas on Redshift.

If you don’t have a sort key, and you haven’t done things like vacuum your tables when you’ve added new data, you may do a filter and all of a sudden it takes forever because you’re scanning billions of rows to find stuff in the last day.

Distribution keys and sort keys are also critical. I see customers who don’t have sort keys, so they have performance issues. The problem with that is we don’t have a traditional index in Redshift at all.

We use zone maps, based on your sort key. We have metadata, for every single block on disk that tells us what the min and max values are for that block. So, when you filter on it, Redshift doesn’t have to scan the whole database to find it.

But if you don’t have a sort key, and you haven’t done things like vacuum your tables when you’ve added new data, you may do a filter and all of a sudden it takes forever because you’re scanning billions of rows to find stuff in the last day.

Redshift can deal with a lot of different schemas but as long as you do your sort key and your distribution key properly.

We have an interesting new feature called Interleaved Sort Keys. With interleaved, you can choose any of those keys, or only one of them, and scan across your data performantly. (Chartio’s AJ Welch is working on a blog post about Interleaved Sort Keys. Subscribe to our blog to be notified when it’s online.)

Nathan: As I mentioned, a lot of our data comes over in a pretty familiar schema we designed within Mongo to describe educational data. It’s fairly relational in nature. So, we try to use things like sort keys but we don’t really change how the actual data is structured in Redshift.

We do have to deal with some issues, such as flattening fields that came from Mongo, but it hasn’t really been an issue for us, because our data is mostly an array of many strings, and you can just search on it. But I imagine we could do more post-processing if we needed to make that data more queryable.

Sort keys are essential for events. We’re inserting in timestamp order, so it’s pretty obvious to sort on the timestamp.

Marc: I don’t have really too much interesting to say here. We’re mostly relational and then we have lots of roll-ups. The roll-ups are usually by user, by platform, and a few other columns. A lot of our information is dates and time stamps for sort keys and then distribution keys are using user IDs.

We’re probably going to move a lot of our preprocessing and staging to Kafka, which will allow us to do better partitioning.We do some partitioning in Hadoop, and we basically have the user events go over here and non-user events go over here. Once we do that, there’s more we can do with distribution keys and sort keys. I’m really excited about those composite sort keys that I did not know about before.

We do a lot of pre-aggregation but it’s fairly straightforward. Most of our workflow is aggregations that are just number of engagements of a particular type per user. A lot of our stuff is user and date related, so that maps pretty well too with like sort keys and distribution keys.

AJ: I’ll add one thing that’s less to do with table design and more about thinking of the database as a whole. Historically, the star schema was the only thing you’d write to the database and make available to your users. But designing that star schema takes an upfront investment. If you’re just starting out, it makes a lot of sense to get your data in there as fast as possible and just iterate on it. There’s actually a great 2009 paper from the Berkeley Database Research Group.

They examined how to design a data warehouse that serves not only business users, but data scientists doing complex analyses. How do we structure this database so that these users, with very different needs, don’t get in each other’s way? They put forward the idea of having four separate schemas.

At the base, there’s a staging schema that you’ll give to your analysts. Your analysts have the skills to work with the raw data, understand how Redshifts works and want the data ASAP.

The next layer up is the production schema – your most widely used schema. That’s for your business analysts: people that know SQL, know Chartio, and they’re going to be in there every single day. It’s a more refined schema. Maybe it is a star schema; maybe it’s slightly aggregated.

At highest level is the reporting schema. It’s the most aggregated layer. It’s updated maybe monthly or weekly. These are reports that you’re generating for executives or management, not for business analysts.

With this design, each user gets their own view of the database. If someone only needs the reports, then they only see that. If someone needs to go down a little bit deeper, then they get access to the production schema. Skilled data analysts get access to everything.

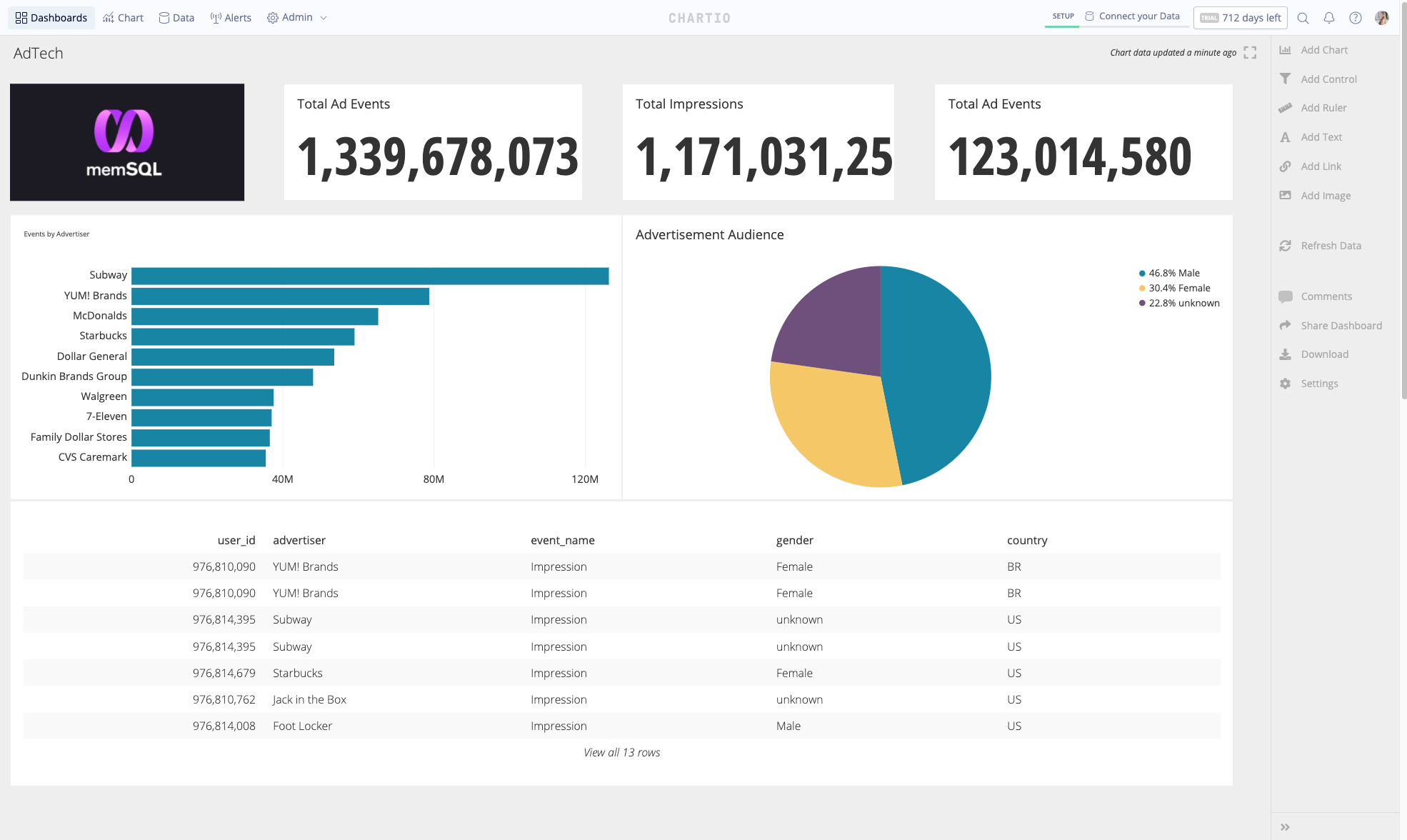

We see that as a good model to scale up, and Chartio can help with that. We just released custom schemas that can actually help you build that type of structure in Chartio without having to create those separate schemas in Redshift. You can hide tables, and you can create schemas that are specific to certain terms. You can create derived views and calculated columns. And you can do it directly in your BI tool.

If you have a lot of users, a lot of tables, how do you figure out where the problems are?

Tina: We actually have a really rich set of system tables that can tell you almost anything you need know. The issue is figuring out where they are and how to use them, and so we have a utility on GitHub that has a bunch of queries that our database engineers put together. That will tell you important things such as which of my tables are unsorted, what are my slowest queries, and what tables are they querying on. I highly recommend using it.

What’s one of your favorite analysis that Redshift allows you to perform?

Nathan: Using Redshift with Chartio gives Clever the ability to have everybody in the organization taking advantage of SQL. Even people who are on our support and business teams. That’s probably the greatest thing.

Chartio supports Google Analytics as one of its back ends, so we can use Chartio to join data from our Mongo with data from Google Analytics.

The exciting part about analytics at Clever is that we interact with a lot of end users in schools who have their data in legacy systems. Giving them access to interesting data is huge.

There might be a math app that many students are using. We can show principals information about this they just haven’t been able to see before. This enables Clever to add value to their data.

Marc: Beyond the fact that Redshift has allowed us to do crazy stuff that probably we should be doing elsewhere, a lot of our analysis has been revolutionized in Redshift. Chartio has helped us a lot with that.

But in terms of our favorite analysis, we have on the order of billions of game plays in MySQL, this was just a pain in the ass to access. In Redshift, we just came up with this crazy time series query that allows you to batch and find out all the sequential stuff.

Also, I don’t think we’ve mentioned window functions, but they’re awesome. I use them all the time.

Don’t miss Part 1 of our Redshift best practices roundtable.

For more information on using Amazon Redshift with Chartio, see our white paper Optimizing Amazon Redshift Query Performance.