Building a Data Stack: An Overview on Amazon Redshift

Posted by on December 15, 2016 Data Governance

Historically, data warehouses were clunky systems that took up physical space, needed a white-glove installation and required a team of database administrators to maintain the system.

Today’s data warehouses are cloud-based, incredibly fast and more cost effective than legacy systems. Cloud-based systems are more appealing to a wide range of businesses from SaaS to Fortune 500 companies. Of the cloud-based data warehouses, Amazon Web Services (AWS) pioneered the movement and refocused public perception with Amazon Redshift.

Since launching in February 2013, Amazon Redshift has been one of the fastest-growing Amazon Web Service (AWS) offerings. Notedly, Amazon Redshift is based on PostgreSQL 8.0.2 and technology created by ParAccel, a database management system designed for advanced analytics for Business Intelligence. Since its launch, Amazon Redshift has added more than 130 significant features making it cloud-native data warehouse that’s different than ParAccel.

In this blog post, we’ll provide a high-level analysis pertaining to seven important functionalities and capabilities to Amazon Redshift’s data warehouse. Whether you’re evaluating data warehouses for the first time, performing a competitive advantage or looking for a cloud-based solution, you can use this as a reference point. As a high-level analysis, we’re focusing around the key areas of performance, operations and cost, as we believe these are the crucial elements for your evaluation process.

Amazon Redshift Overview

In choosing a data warehouse, it’s best practice to choose one that fits into your business needs, integrates well into your data infrastructure and will scale alongside your company. With that, many of today’s cloud-based offerings will fulfill the aforementioned requirements. Here’s a quick look at Amazon Redshift:

-

A fully-managed petabyte-scalable systems

-

Leverages parallel processing

-

Leverages columnar storage

-

Geared towards interactive reporting on large data sets

-

Supports integrations and connections with various applications, including Business Intelligence tools

-

Written in standard SQL based off of PostgreSQL 8.0.2

Performance: Throughput and Concurrency

Rather than analyzing feature by feature of Amazon Redshift’s performance features, we’re focusing our analysis on throughput and concurrency, as these are the major bottlenecks when companies look into interactive reporting for the voluminous data set.

Throughput

Throughput is the speed at which a data warehouse can perform queries. For those evaluating a data warehouse, throughput is a key area of consideration because customers want to ensure that they’re investing time, resources and money into a system that will adapt and perform to their needs.

Amazon Redshift uses a distributed columnar architecture to minimize and parallelize the I/O hurdles that many traditional data warehouses come up against. Within the Amazon Redshift system, each column of a table is stored in data blocks with the goal of reducing I/O so only relevant data is retrieved from disks. Amazon Redshift is able reduce I/O through:

-

Column storage fetches data blocks only from the specific columns that are required for queries

-

Customers typically get 3 - 5X compression, which means less I/O and more effective storage

-

Zone maps: Each column is divided into 1MB data blocks; Redshift stores the min/max values of each block in memory and is able to identify the blocks that are required for a query

-

Direct-attached storage and large block sizes (1MB) that enable fast I/O

With the release of Dense Storage (DS2) in June 2015, it allows for twice the memory and compute power of its predecessor (DS1) and the same storage capacity at the same cost, which leads the way for overall improvements to Amazon Redshift. Since this release, Amazon has worked to improve Amazon Redshift’s throughput by 2X every six months. Additionally, Amazon Redshift has improved vacuuming performance by 10X.

For those working with a wide variety of workloads and a spectrum of query complexities, this is a welcome improvement to the Amazon Redshift system.

Concurrency

Every data warehouse has concurrency limitations, or the maximum number of queries you can run simultaneously without leading to slowness in generating interactive reports. Having said that, you may come up against issues around concurrency when democratizing data access for users who explore data within pre-existing dashboards via a Business Intelligence tool. That, coupled with the data warehouse simultaneously having to ingest new data streams for reporting can be taxing on the overall system.

While this may seem like a simple maneuver each of these can easily generate a large number of queries with varied resource requirements, thus running up against the concurrency limitations and a lag in generating reports.

As an Amazon Redshift administrator, in the Management console you’re able to set the concurrency limit for your Amazon Redshift cluster. While the concurrency limit is 50 parallel queries for a single period of time, this is on a per cluster basis, meaning you can launch as many clusters as fit for you business. It’s important to note that you’re able to also have a maximum of 500 concurrent connections per cluster. Meaning, queries from up to 500 users will get executed with up to 50 queries at any given time.

Having said that, Amazon’s documentation recommends only running 15 - 25 queries at a time for optimal throughput. Additionally, Amazon Redshift allows users to adjust their Workload Management (number of slots/queries, queues, memory allocation and time out) to best address their needs.

This is an important aspect of Amazon Redshift’s ability to provide interactive reports to its customers. Whether your company is enabling data exploration by allowing business users to run their own queries via an interactive BI tool or you’re fielding ad hoc query requests, it’s crucial to understand Amazon Redshift’s concurrency limitation as this will impact the speed at which you receive results.

Conclusion

At the end of the day, throughput and concurrency impact one another and both impact the overall performance of a data warehouse. For users, they all want the same thing: a highly performant data warehouse that will meet their needs and allow everyone to access data.

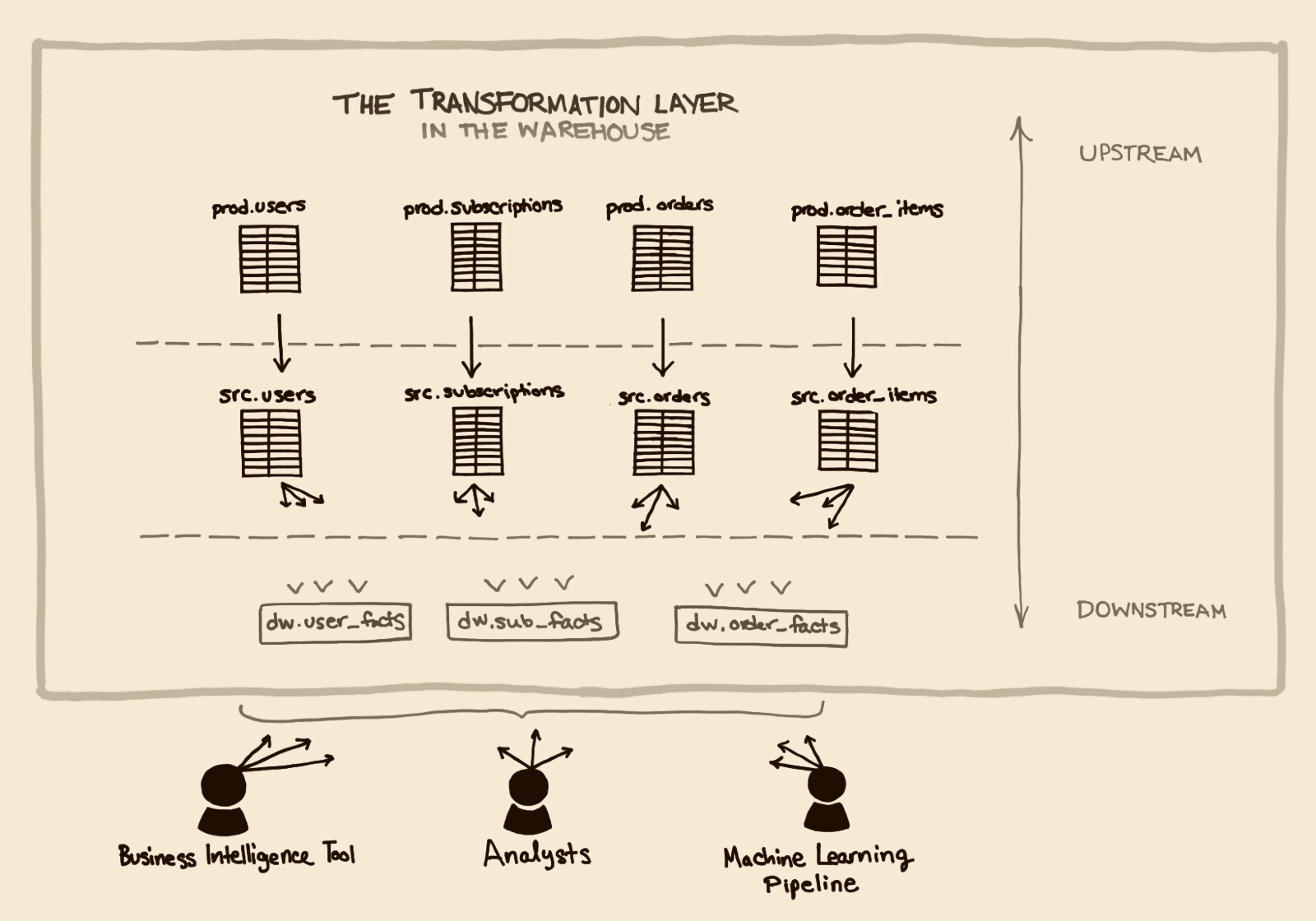

In enabling everyone to access data, it generates questions, leads to crucial insights and drives better decision-making that wouldn’t be possible without a data warehouse.

Amazon Redshift is currently being used successfully by many companies such as NASDAQ, Soundcloud, theSkimm and Clever to provide interactive analytics. As an extremely agile, heavily documented and well understood system with a large community base, knowing the strengths and weaknesses of the system allows architects and engineers to maintain it appropriately within their data infrastructure.

Operations

Data warehouses are architected to handle a large volume of data. In fact, many companies use data warehouses to store historical data going back at least three years—and this is a great practice when it comes to enriching information for a target persona or running product usage analysis.

Provisioning

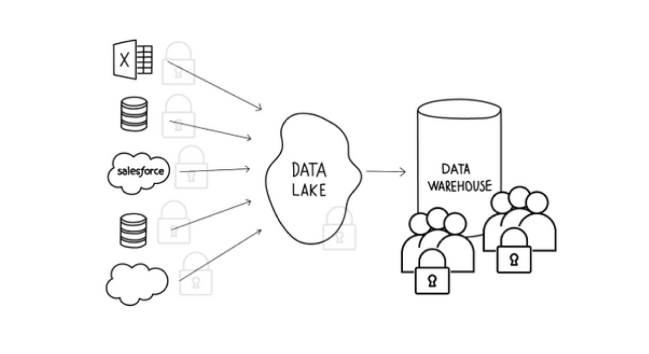

Data provisioning is the process of making data available in an organized and secure way to users and third-party applications that need access to the data.

According to its documentation, “an Amazon Redshift data warehouse is a collection of computing resources called nodes, which are organized into a group called a cluster. Each cluster runs an Amazon Redshift engine and contains one or more databases.”

In architecting your Amazon Redshift, you must first provision a cluster made up of one or more nodes, which is handled seamlessly by the table distribution styles. Currently, Amazon offers four types of nodes when setting up your Amazon Redshift, you’ll need to launch a cluster and Amazon automates the rest of the process. The number of nodes that you choose is based on the size of your dataset and query performance. Many Amazon Redshift users see cluster provisioning and choosing node types as a means of greater flexibility, allowing them to use different node types for different needs for optimal performance.

Because Amazon Redshift is architected to meet the needs of its users, Amazon provides an elasticity option of adding and subtracting nodes when necessary. Amazon users may want to resize a cluster when they have more data or they may want to size down their cluster if their business needs and data changes. Luckily, since Amazon automates the entire resizing process, it only takes a few minutes to complete the process.

Although a cluster is only available as read-only when resizing, Amazon allows users to run a parallel environment of the same data and continue to run analytics on the replicated cluster without impacting the production cluster or ongoing workload.

If your company is willing to build a data warehouse infrastructure upfront and are able to proactively think about data organization, then Amazon Redshift is the clear choice. With various types of nodes available with huge storage capacities, Amazon Redshift is a robust system that is used by enterprise-grade and scaling startups.

Loading

If you’re a current AWS customer Amazon has optimized methods for loading data into Amazon Redshift from the other AWS services that you’re already using.

The most optimized way users load their data is from Amazon S3 and then use the COPY command to parallel load into Amazon Redshift. In addition to Amazon S3 and Elastic MapReduce, you can also load data from DynamoDB, any SSH-enabled host or via PostgreSQL wire protocol into Amazon Redshift.

In loading data, Amazon Redshift ingest performance continues to scale alongside the size of your cluster. Thus, using the COPY command leverages the MPP architecture to read and load data in parallel from files in an Amazon S3 bucket.

Additionally, Amazon Redshift has a robust partner ecosystem with ETL (extract, transform and load) tools where they will push data from your production database into Amazon Redshift.

Maintenance

With a cloud-based data warehouse, there’s no physical infrastructure to manage, allowing for a streamlined focus on analytics and insights, rather than hours of manual maintenance. But, like any system, every data warehouse needs to undergo maintenance for a tune up from time to time.

As a data warehouse built with MPP concepts, Amazon Redshift requires periodic maintenance which makes the system run faster. However, this maintenance is fully taken on by Amazon Redshift and includes all facets of database management. From a performance perspective the ability to query, load, export, backup, restore and resize is parallelized for users.

Once in maintenance mode, Amazon Redshift monitors the health of a variety of components and failure conditions within an AZ and recovers from them automatically.

Another way Amazon Redshift performs maintenance is through the VACUUM feature, which is a command to remove rows that are no longer needed within the database and then sorts the data.

Running VACUUM is an optimal operation because it reclaims space and resort rows. Amazon Redshift allows its users to DELETE or UPDATE a table, this coupled with Amazon Redshift’s I/O minimization (only relevant data blocks are fetched)—this leads to optimal query performance. It’s important to note that running VACUUM is not required, particularly if Amazon Redshift is used in an append-only fashion.

The VACUUM command is a significant distinction between Amazon Redshift and its closest competitor, Google BigQuery. As Amazon Redshift sorts data to fetch only relevant data blocks, it forgoes Google BigQuery’s method of reading an entire table, which could potentially lead to degraded query performance.

For more information on how Amazon Redshift utilizes the VACUUM command, reference Amazon Redshift’s documentation.

Security

Cloud-based data warehouses require a flexible and powerful security infrastructure and operate with scalable requirements. Amazon Redshift, alongside its parent AWS ecosystem, take security very seriously and offer a secure way for users to encrypt their data.

As an Amazon Redshift user, you’re able to manage security for your data warehouse in a multitude of ways, including encrypting your workloads end-to-end to protecting access to your cluster, managing overall access to specific users and leveraging your own hardware security module (HSM, either on-premise or via AWS servers fully-managed service). For the purposes of this blog post, we’ll focus on a few:

-

Virtual Private Cloud (VPC): To protect access to your cluster by using a virtual networking environment, you can launch your cluster in an Amazon VPC.

-

SSL connections: To encrypt the connection between your SQL client and your cluster, you can use SSL encryption.

-

Cluster encryption: To encrypt data in all your user-created tables, you can enable cluster encryption when you launch the cluster.

Enterprise customers such as NASDAQ, Finra and NTT Docomo have relied on Amazon Redshift for years and have accumulated petabyte-scale because of its security and compliance. For a full list on how to manage security within Amazon Redshift, read their Security Overview documentation.

Cost

As data becomes more accessible and cost efficient—every business must leverage data for their advantage. With that, Amazon Redshift is a cost-effective solution for every company’s data warehousing needs, whether you’re a startup with a large of amount of data to a Fortune 500 company that has been accumulating data for years.

Having been on the market for some time, AWS has a large and mature ecosystem with more than 10 years of experience that offers a full suite of cloud computing services (EMR, RDS, Kinesis, S3, EC2) that has years over the Google Cloud Platform ecosystem. If you’re already invested in the AWS ecosystem, it’s cost effective to continue to purchase their services.

Amazon Redshift offers on-demand pricing (see below) and several Reserved Instance (RI) options of up to 75% discounted over on-demand pricing. This pricing model leaves users with the ability to construct their data warehouse according to their business needs.

With on-demand pricing, Amazon Redshift charges per-hour per-node, which includes both compute and storage. This pricing model is predictable as users are able to run as many queries as necessary without being penalized by a high cost.

Pricing starts at $0.25 per hour for 160GB of data. A cluster of 8 X dc1.large nodes costs $1,440 for a 30-day month. Additionally, users can purchase two nodes (users get the leader node for free, so in total there are three nodes) for $360 for a 30-day month.

However, it’s important to note that since Amazon Redshift has fixed compute (SSD) and storage (HDD), scaling one requires scaling the other and therefore attributes to overall cost.

Conclusion

Amazon Redshift offers a more mature, agile and standard data warehouse with all the high-performing capabilities of a cloud-based data warehouse with tons of documentation, cost-effectiveness, flexibility and use cases.

For and in-depth use case how to leverage Amazon Redshift to predict projected revenue and compare the data warehouse to its leading competitor, Google BigQuery, download our white paper What to Consider When Choosing Between Amazon Redshift and Google BigQuery.