Building a Data Stack: An Overview on Google BigQuery

Posted by on December 20, 2016 Data Stack, Data Analytics

With everything seemingly moving towards the cloud, it was only a matter of time that data warehousing followed suit and into the cloud. In the past few years, cloud-based and serverless data warehouses have cropped up and the market for it is incredibly competitive. As cloud-based systems become more cost-effective, high-performing and overall appealing to a diverse set of customers, it’s important to analyze their strengths and weaknesses.

In 2012, the Internet giant Google publicly beta launched Google BigQuery, a data warehouse within the Google Cloud Platform ecosystem, at their Google I/O conference. This launch marked Google’s entry into the data warehouse competition against leading competitor Amazon Redshift.

Google BigQuery was initially created by developers as an internal tool called Dremel. Since launch, Google has made significant changes upon the internal tool and created a data warehouse that is used by both large enterprises as well as smaller companies. Google BigQuery takes full advantage of Google’s infrastructure and processing power and is an append-only system.

In this blog post, we’ll provide a high-level analysis pertaining to seven important functionalities and capabilities to Google BigQuery’s data warehouse. Whether you’re evaluating data warehouses for the first time, performing a competitive advantage or looking for a cloud-based solution, you can use this as a reference point. As a high-level analysis, we’re focusing around the key areas of performance, operations and cost, as we believe these are the crucial elements for your evaluation process.

Google BigQuery Overview

There’s no one-size-fits-all data warehouse, however today’s cloud-based data warehouses are serverless, easy to manage and will scale alongside your company and voluminous data. Here’s a quick look at Google BigQuery’s system:

-

A fully-managed petabyte-scalable systems

-

Leverages parallel processing

-

Leverages columnar storage

-

Geared towards interactive reporting on large data sets

-

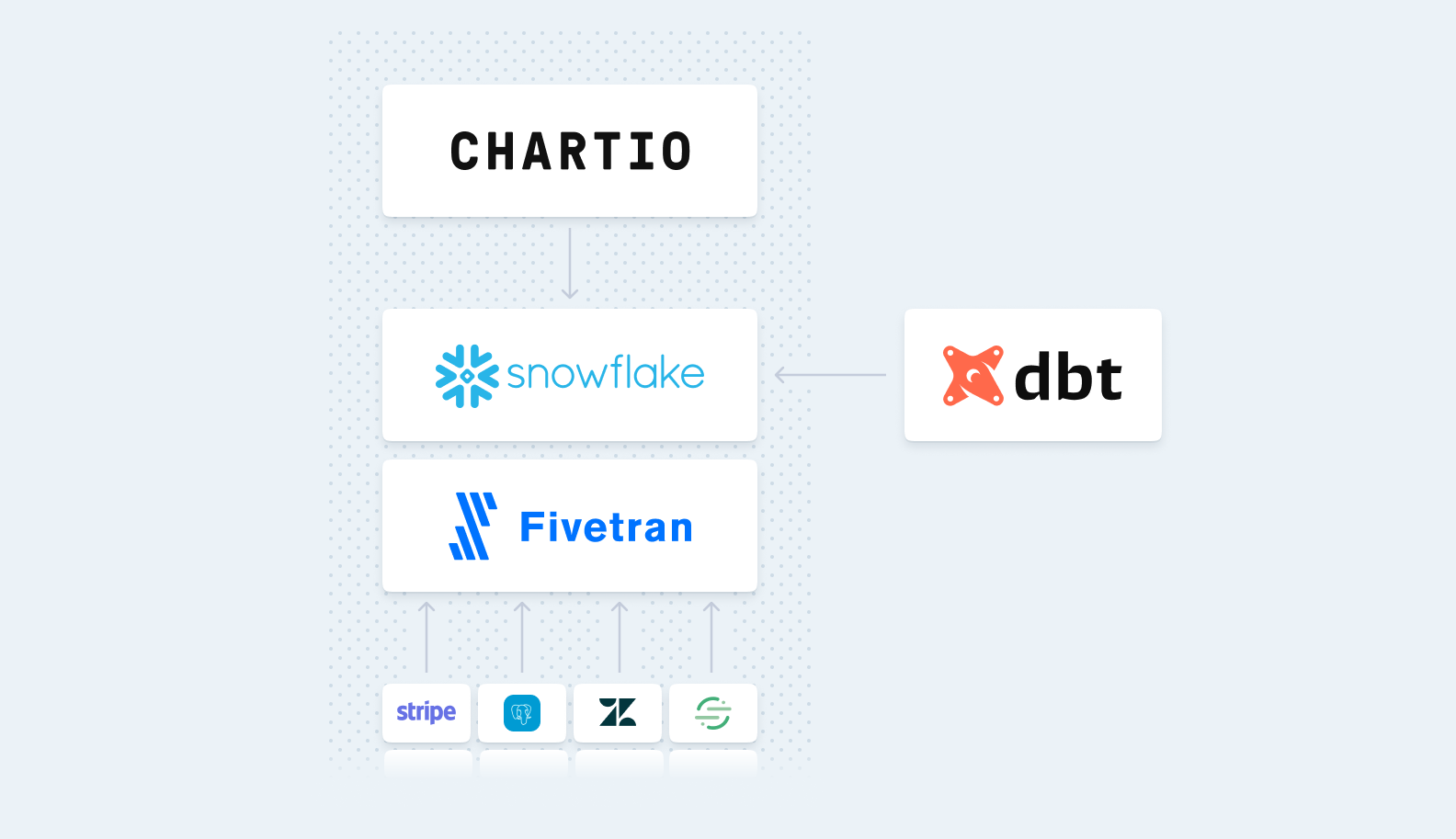

Supports integrations and connections with various applications, including Business Intelligence tools

Performance: Throughput and Concurrency

Even though today’s leading data warehouses are cloud-based systems, that doesn’t mean that they don’t run into any sort of limitations. We’re focusing our performance analysis on throughput and concurrency, as these are the major bottlenecks when companies look into interactive reporting for the voluminous data set.

Throughput

Throughput is the speed at which a data warehouse can perform queries. For those evaluating a data warehouse, throughput is a key area of consideration because customers want to ensure that they’re investing time, resources and money into a system that will adapt and perform to their needs.

Similarly, Google BigQuery relies on Dremel and Colossus (the successor to the Google File System) for its storage, compute and memory. These systems are then tied together by Google’s Petabit Jupiter Network, which allows every node to communicate with other nodes at 10G. In leveraging this architecture, one of the major benefits is bottleneck-free scaling and concurrency.

In March 2016, Google BigQuery released Capacitor, an updated and state-of-the-art storage format system within the data warehouse. Capacitor is designed to take an opinionated approach to storage in allowing background processes to constantly evaluate customers’ usage patterns and automatically optimized these datasets to improve performance. This process is entirely automated and transparent for end users, which allows queries to run at a faster rate because it adapts to a user’s Google BigQuery environment. For further information on Google BigQuery’s throughput, you can read their Medium post.

Concurrency

Every data warehouse has concurrency limitations, or the maximum number of queries you can run simultaneously without leading to slowness in generating interactive reports. Ideally, all queries could operate without any contention for resources, under the hood, every data warehouse has resource constraints and thus practical limits on concurrent workload capabilities.

On the other hand, Google BigQuery isn’t immune to the 50 query concurrency limit. Although not a traditional MPP database, Google BigQuery still uses MPP concepts, only on a much larger scale. It introduces a few innovations that workaround the MPP limits, in particular concurrency limits:

-

Separating compute and storage: Historically, MPP systems couple compute and storage in order to avoid the performance hit of fetching data across the network. However, this limits the system’s ability to scale compute up and down based on the number of queries being processed. Google BigQuery separates compute and storage so that the system as a whole can scale compute to match the current query load at the cost of potentially increased network traffic for any particular query.

-

Using an order of magnitude of more hardware: Unlike a traditional MPP system where administrators would have to plan the number of compute nodes a cluster should have ahead of time, Google dynamically allocates a varying number of servers. Where Amazon Redshift has a node limit of 128, Google is potentially using thousands of servers to process the query.

A downside to having more hardware means that Google provides this feature to all its users, meaning it’s a shared, multi-tenant system with other Google BigQuery users.

At the end of the day, throughput and concurrency impact one another and both impact the overall performance of a data warehouse. For users, they all want the same thing: a highly performant data warehouse that will meet their needs and allow everyone to access data.

Google BigQuery although used by enterprise sized companies such as The New York Times, Spotify and Zulily to provide flexible analytics at scale lacks the robust documentation and community that follows Amazon Redshift, which can make it a bit difficult to resolve issues when they appear. Though in the past few months Google has been working to improve Google BigQuery, particularly around cost transparency and moving towards Standard SQL.

Operations

In separating compute and storage, Google BigQuery is able to run super-fast queries against multiple terabytes of data in seconds. For data warehouse, the speed at which they’re able to operate is crucial to getting interactive reporting and fast insights. In this section, we’ll analyze how Google BigQuery handles provisioning, loading, maintenance and security.

Provisioning

Data provisioning is the process of making data available in an organized and secure way to users and third-party applications that need access to the data.

For Google BigQuery, data is provisioned into tables and queries are run from those tables directly. Unlike it’s leading competotor, Amazon Redshift, configuring clusters and nodes is not written in their architecture.

According to Google’s blog post, “We have customers currently running queries that scan multiple petabytes of data or tens of trillions of rows using a simple SQL query, without ever having to worry about system provisioning, maintenance, fault-tolerance or performance tuning.”

As of September 2016, Google recently released a flat-rate pricing model giving high-volume, enterprise customers a more stable monthly cost for queries rather than an on-demand model of data processed. The flat-rate pricing starts at a monthly cost of $40,000 with an unlimited amount of queries. It’s worth noting that with this pricing model, storage is priced separately.

For many smaller companies, forking over $40,000 per month for unlimited queries may not be an option. While provisioning data may seem easier in Google BigQuery, it’s at the cost of limited predictability in monthly pricing and holding a quota threshold to manage a cost effective system.

However since Google BigQuery has chosen to forgo organization because they’re able to have an order of magnitude of hardware that is unlike their competitors. For companies with an incredibly large volume of data, Google’s approach to non-provisioning is alluring.

Loading

Another key feature of data warehouses is its mechanism for loading data from the operational database into your preferred data warehouse. The evaluation process of data loading should include speed, accessibility and latency once data is loaded into the data warehouse.

As a Google Cloud Platform customer, data loading between Google platforms is already built-in. According to Google documentation, users can load data directly from a readable data source and by inserting individual records using streaming inserts.

An advantage for customers using Google BigQuery is the ability to have federated access to data sources. Meaning, customers can store their data in Google Cloud Storage or Google Sheets and query from those data sources within Google BigQuery—without having to load the data into the data warehouse first.

Further, there are a few differences when using Google Analytics. Once your website’s data grows to such a large volume, Google will limit your access of data to a sample set, thus limiting your ability to run performant queries. As a workaround, users can upgrade their Google Analytics account to Premium at the flat-rate annual cost of $150,000 and included in this upgrade is an automatic sync from Google Analytics into Google BigQuery.

Maintenance

As aforementioned, Google has managed to solve a lot of common data warehouse concerns by throwing order of magnitude of hardware at the existing problems and thus eliminating them altogether.

Since Google BigQuery does not require data provisioning, maintenance is much less of an issue because it’s not a requirement for the system to be performant. The advantages to not requiring maintenance is the flexibility of having your data available at all all times without periodic maintenance. However, a potential downside to not requiring maintenance is that users are unable to remove or resort irrelevant data, which can lead to a higher cost since Google BigQuery charges by data processed.

However, Google has implemented ways in which users can reduce the amount of data processed:

-

Partition their tables by specifying partition date in their queries

-

Use wildcard tables to shard their data by an attribute

In a Google blog post titled BigQuery Under the Hood, it states, “The ultimate value of BigQuery is not in the fact that it gives you incredible computing scale, it’s that you are able to leverage this scale for your everyday SQL queries, without ever so much as thinking about software, virtual machines, networks or disks.”

Keep in mind that by design, Google BigQuery is append-only. Meaning, when planning to update or delete data, you’ll need to truncate the entire table and recreate the table with new data.

While Google BigQuery may have instances where it is arguably faster than Amazon Redshift—it sacrifices organizational structure in favor of no maintenance. However, Amazon Redshift customers have appreciated its ability to optimize cost and performance for their use case.

Security

Cloud-based data warehouses require a flexible and powerful security infrastructure and operate with scalable requirements. Amazon Redshift, alongside its parent AWS ecosystem, take security very seriously and offer a secure way for users to encrypt their data.

Much like the entire Google Cloud Platform, Google BigQuery also encrypts all data at rest by default. Data encryption is a process that takes readable data as input and transforms it into an output that reveals little to no information about the input.

For the Google Cloud platform ecosystem, encryption at rest reduces attacks and allows systems, like a Business Intelligence tool, to manipulate data for analysis without providing access to content.

For the Google Cloud Platform, “encryption at rest reduces the surface of attack by effectively ‘cutting out’ the lower layers of hardware and software stack.” For a full overview on Google Cloud Platform’s Security and Compliance, read their documentation.

Cost

Another selling point for cloud-based data warehouses is that they’re cost-effective alternatives to legacy, on-site systems. According to Google BigQuery documentation, “BigQuery charges for data storage, streaming inserts, and for querying data, but loading and exporting data are free of charge.”

Since Google BigQuery is able to separate compute and storage, it allows for an extremely flexible pay-as-you-use pricing model (it charges by GB usage, starting at $0.02 per GB per month) This has allowed companies with smaller data sets to experiment with a data warehouse without running up a large purchase order. However, it’s critical to note that running queries is a separate cost from storing data.

For Google BigQuery, query pricing is the cost of running your SQL commands and user-defined functions and changes by the number of bytes processed. Query pricing has three tiers: 1TB for free, $5 per TB for on-demand tier and $40,000 for 2,000 queries for flat-rate pricing.

Though Google has been transparent that flat-rate pricing is aimed for the enterprise, Google BigQuery does offer a handful of free capabilities including: loading data, copying data, exporting data and metadata operations.

A downside to the pay-as-you-use model is that pricing is less transparent and hard to predict for long-term budgeting. Users have stated that complex analytics and query errors can end up in an unexpected cost. However, BigQuery now provides a Cost Control feature that enables users to set a cap to daily costs. As the Google BigQuery administrator, you’ll proactively have to manage the daily quota querying and once the cost effective query limit has been reached, they’ll have to communicate it with their team and cease performing queries until the cost has rebalanced.

Conclusion

With Google BigQuery rapidly growing in popularity with scaling companies, it still has its own hurdles in pricing transparency. However, it does excel in terms of getting your data warehouse off the ground and running quickly without having to provision appropriately.

For and in-depth use case how to leverage Google BigQuery for personalized sales pitches and compare the data warehouse to its leading competitor, Amazon Redshift, download our white paper What to Consider When Choosing Between Amazon Redshift and Google BigQuery.