Data Wars Episode I: The Democratization of Data

Posted on August 23, 2017 in Data, Data Analytics

Last week I came across two challenging data stories.

In the first, a Director of a business team at a mid-size company (we’ll call them Gated Co.) was explaining the process for getting access to a new data report. The company used a centralized approach to data analysis, with a single data team serving the needs of all business teams. The process went as follows:

- The user files a ticket with the data team

- The data team responds to the ticket within a one-week SLA

- The report is then built and delivered, taking four to five weeks on average

As you might imagine, by the time the report arrives weeks later, the need behind it has often passed, and the report no longer delivers business insights in a timely fashion.

In the second story, an Executive at a 150-person startup (we’ll call them Messy Co.) described his company’s decentralized approach to data analysis, in which everyone had access to data and could explore it and create their own reports.

Although it was great that people had access to data and were actively analyzing it, the Executive was still frustrated. The company had no data governance process in place, and no oversight of what data was used or how it was processed to create a report. Things were getting chaotic, bordering on data anarchy.

The Executive shared an anecdote about five people walking into the same meeting to discuss a metric: each attendee had their own way of calculating it, and each arrived with a different value.

These stories highlight two very different points on the wide spectrum of data maturity we’re seeing among our customers and prospects. The prevailing question remains: who owns the analysis and understanding of data?

Is it a centralized team of Data Analysts? Or can we effectively and efficiently open up data analytics to everyone in the organization?

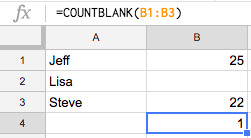

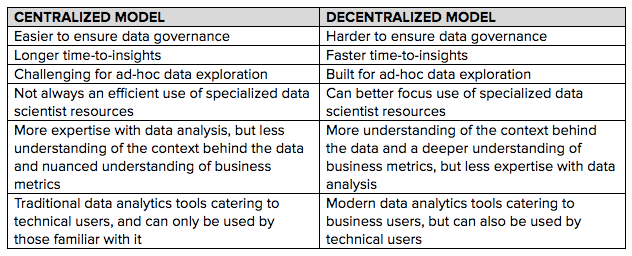

While there are pros and cons to implementing a centralized or decentralized analytics model, it’s important to understand the differences between the two.

Centralized vs. Decentralized Analytics Model

Data democratization is very real today, and a growing number of companies are eager to embrace a decentralized model for data analytics. But the transition from a controlled, centralized model to an open, decentralized one is far from straightforward.

The challenges of this transition take many forms:

It can be cultural, in that companies struggle to build data literacy across the organization.

It can be political, in that business teams struggle with their dependence on data science teams.

It can be procedural, in that data scientists want to give business users some analytics capabilities, but there is no easy way to do so.

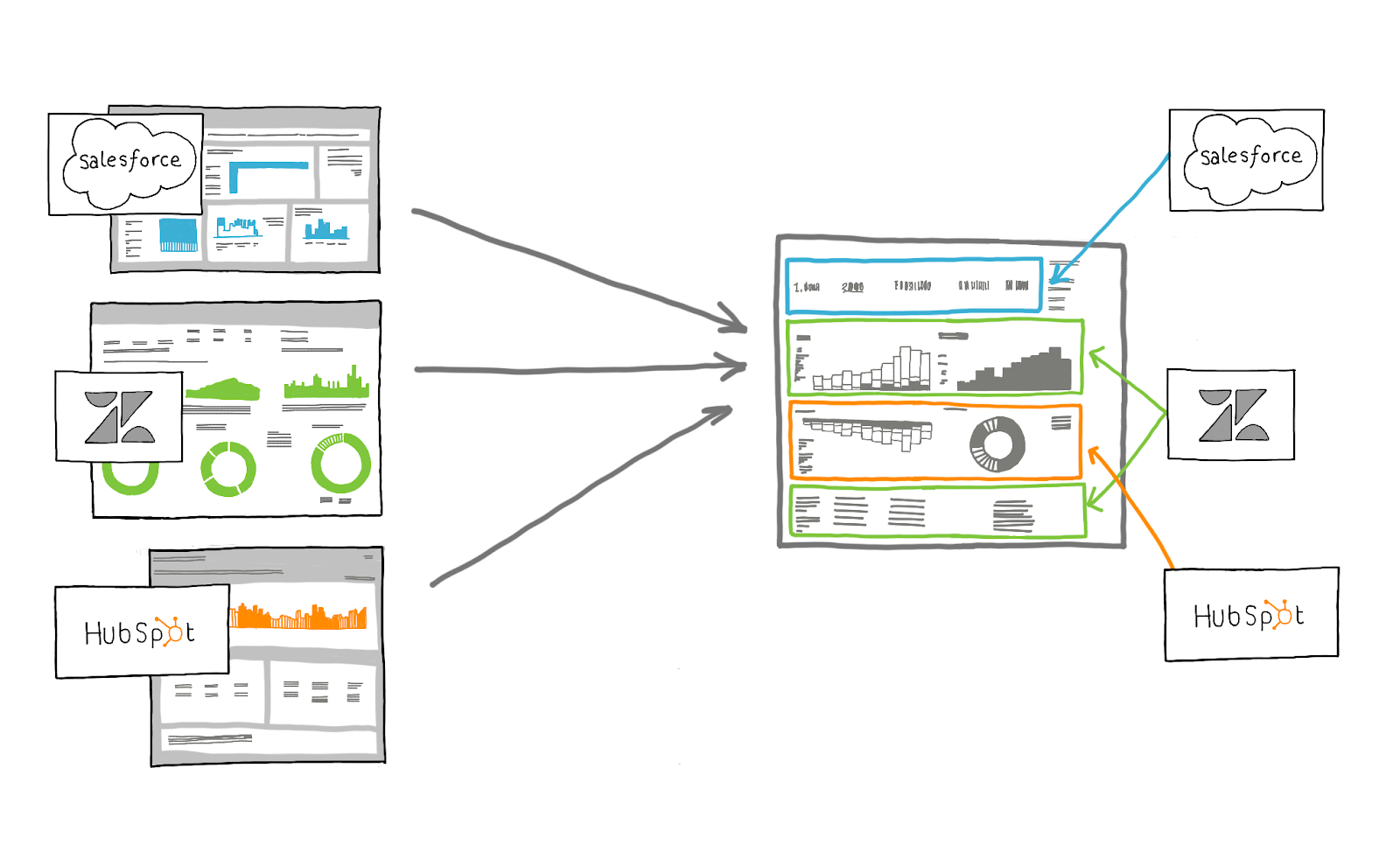

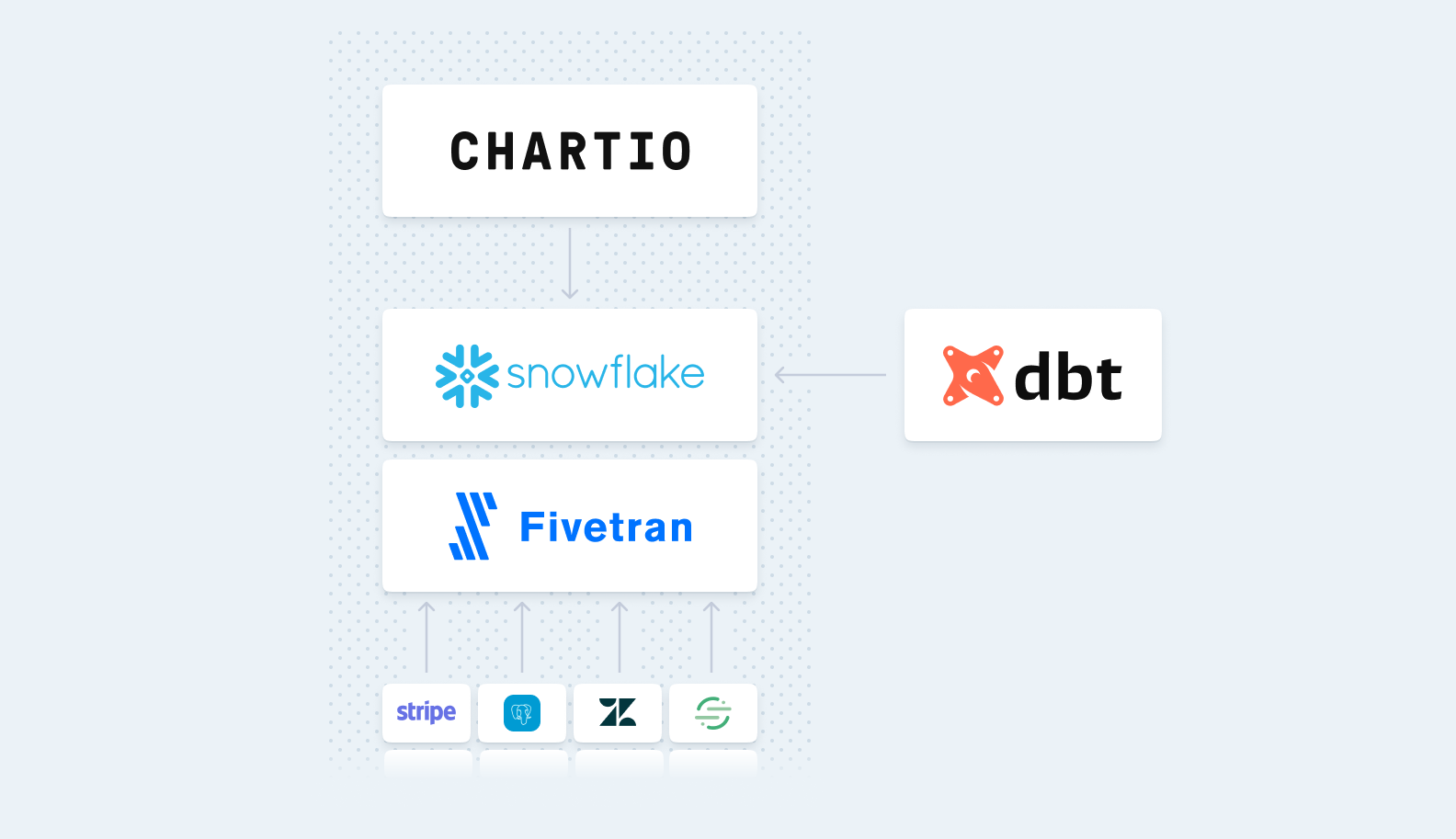

It can be technological, in that Business Intelligence tools are trying to balance power and usability: technical users need to be able to set things up, while business users need to work with the data in the context of their own function.

It can be a matter of resources, in that you may not have the talent to govern, query or organize your data. Data Scientists and Data Analysts are in high demand, and many teams are constrained by their availability.

At the end of the day, many factors go into choosing your analytics model: data size, company culture, organizational readiness, resource availability, tools and more. The right strategy depends on your business and what it can handle. With that in mind, here is what we have learned about both centralized and decentralized analytics from our customers here at Chartio, because analyzing data either way is ultimately better than not analyzing it at all.

Top 3 Tips for Centralized Analytics

1. Clear communication processes between business teams and analysts

In a centralized analytics approach, where Data Analysts support business teams, clear communication between the analysts and the business teams is essential. This includes documenting data requests and having an agreed method for prioritizing them.

2. Balance preparing data with getting business users to their insights

For a company like Gated Co., the data team needs time to mine the data and build a report that delivers timely insights to the business team. Keeping a centralized analytics approach working comes down to managing expectations between the data and business teams.

3. Use the right technology for the job

There are many tools on the market designed specifically for centralized analytics. These tools often skew toward more technical users and can be very valuable to those familiar with them.

Top 3 Tips for Decentralized Analytics

1. Strategically think about data governance

Think of data governance as quality control for your company’s data: a set of processes that ensures data is formally managed and kept up to standard. It’s crucial that data and business teams are aligned on data governance. If they aren’t, your data can quickly become a mess, as it did at Messy Co.

2. Make complex data analysis capabilities accessible to business users

In a decentralized analytics approach, everyone can access and analyze their data. That brings faster time to insight, more questions asked and answered about business metrics, and higher overall productivity across the entire company. Because everyone in the organization can now run truly sophisticated analyses, programs that build data adoption, literacy and training become important.

3. Use the right technology for the job

A new breed of Business Intelligence tools is tackling the proverbial challenge of making a hard thing easy for everyone. Easy connectivity to data sources, no need for elaborate modeling, visual exploration and an interactive user interface are some of the key features that can give everyone equal access to data and a single source of truth.