How to Load Data into Amazon Redshift or Google BigQuery

Posted by on December 1, 2016 Data Governance, Data Analytics

[Editor’s Note: This is our fourth installment in our “Data Warehouse Blog Series.” In our previous installment, we analyzed how Amazon Redshift and Google BigQuery handle data provisioning. Click here to read our previous post How Amazon Redshift and Google BigQuery Handle Provisioning. ]

In evaluating a data warehouse, it’s important to consider how data loads from your database into the data warehouse. Second, it’s critical to also consider the speed, accessibility and latency once data is loaded into the data warehouse.

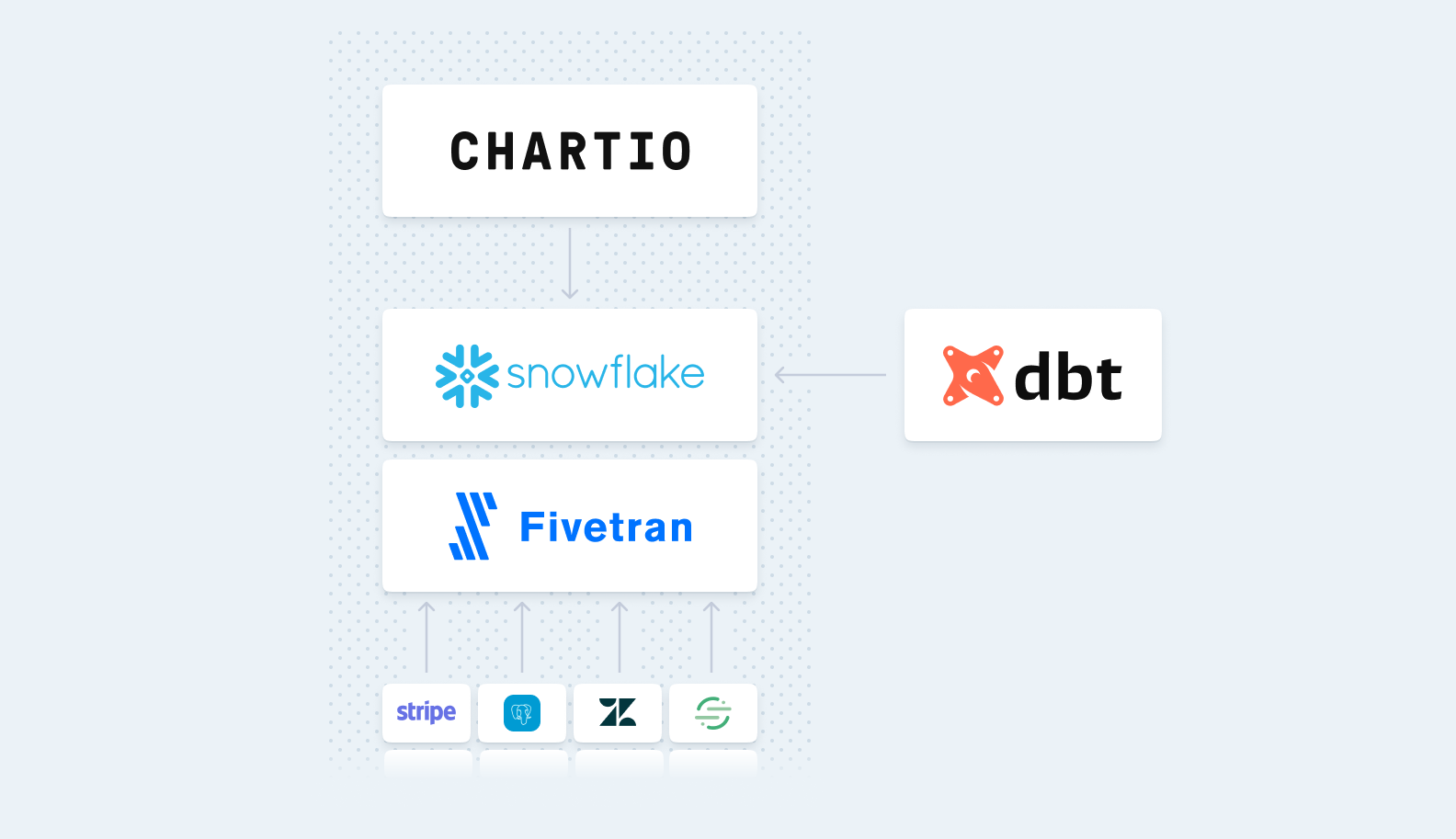

Much like how cloud-based data warehouses revolutionized warehousing, a solution to data loading can be found through an ETL service via companies such as Treasure Data, Segment, Stitch, or an in-house built ETL. However, there are differences in loading data into Amazon Redshift and Google BigQuery.

Amazon Redshift

If you’re a current AWS customer Amazon has optimized methods for loading data into Amazon Redshift from the other AWS services that you’re already using.

The most optimized way users load their data is from Amazon S3 and then use the COPY command to parallel load into Amazon Redshift. In addition to Amazon S3 and Elastic MapReduce, you can also load data from DynamoDB, any SSH-enabled host or via PostgreSQL wire protocol into Amazon Redshift.

In loading data, Amazon Redshift ingest performance continues to scale alongside the size of your cluster. Thus, using the COPY command leverages the MPP architecture to read and load data in parallel from files in an Amazon S3 bucket.

Additionally, Amazon Redshift has a robust partner ecosystem with ETL (extract, transform and load) tools where they will push data from your production database into Amazon Redshift.

Google BigQuery

Likewise, if you’re a Google Cloud Platform customer, data loading between Google platforms is already built-in. So, if you’re already a customer of the Google ecosystem, loading is quite seamless from data source to Google BigQuery. According to Google documentation, users can load data directly from a readable data source and by inserting individual records using streaming inserts.

An advantage for customers using Google BigQuery is the ability to have federated access to data sources. Meaning, customers can store their data in Google Cloud Storage or Google Sheets and query from those data sources within Google BigQuery—without having to load the data into the data warehouse first.

Further, there are a few differences when using Google Analytics. Once your website’s data grows to such a large volume, Google will limit your access of data to a sample set, thus limiting your ability to run performant queries. As a workaround, users can upgrade their Google Analytics account to Premium at the flat-rate annual cost of $150,000 and included in this upgrade is an automatic sync from Google Analytics into Google BigQuery.

Conclusion

If you’re already heavily invested in one ecosystem—whether it’s AWS or Google Cloud Platform—it’s most cost effective to remain a current customer of the said ecosystem. AWS and Google Cloud Platform are platform businesses who offer a variety of systems and are marketed as a consolidator of tools into a single vendor.

Additionally, should you choose to move data warehouses, it’s important to note that it’s going to be difficult to get your data out of Amazon Redshift and into Google BigQuery and vice versa.

In our next installment, we’ll analyze maintenance between Amazon Redshift and Google BigQuery. For immediate action in choosing a data warehouses, download our white paper What to Consider When Choosing Between Amazon Redshift and Google BigQuery now.